We aim to extend robotics beyond assembly lines and other well-structured environments. To leave these large-scale industrial settings, robots will need to perceive and understand their surroundings. Cameras can play an important role here, as they provide dense streams of information whilst being relatively cheap. We believe computer vision is an essential component of any general-purpose robot.

Computer vision has made tremendous progress in areas such as classification, detection, and scene understanding, often powered by deep learning. However, many open questions remain when applying computer vision to robotics. At AIRO, we try to tackle some of these questions and apply the resulting techniques to real-world robots.

Spatially-structured Representations

One line of research focuses on how best to represent scenes. Ideally, we want compact representations that accurately capture all state information relevant to the task at hand. At AIRO, we focus on spatially-structured representations such as semantic keypoints.

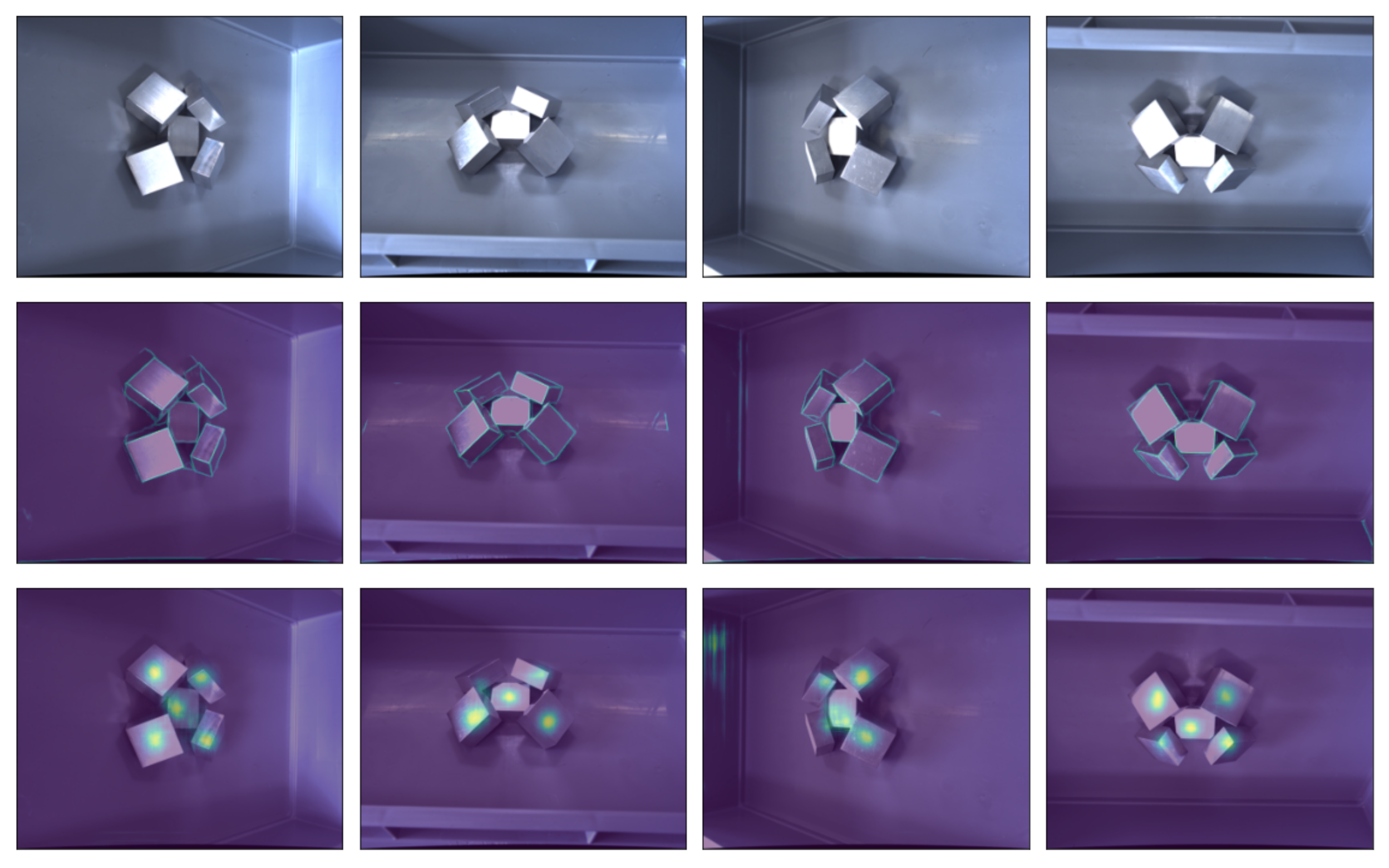

From left to right: a keyline scene representation and semantic keypoint detection on cardboard boxes.
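Semantic keypoint detectors are often trained to output one heatmap per keypoint type, with each keypoint's location read off at the peak of its heatmap. The page does not say which output format AIRO's detectors use, so the NumPy sketch below simply assumes that heatmap formulation; `gaussian_heatmap`, `extract_keypoints`, the `sigma` and `threshold` values and the example keypoints are all hypothetical.

```python
import numpy as np

# Assumption: one heatmap channel per semantic keypoint type (hypothetical setup).

def gaussian_heatmap(u: float, v: float, height: int, width: int, sigma: float = 2.0) -> np.ndarray:
    """Training target for one keypoint: a 2D Gaussian centred on pixel (u=column, v=row)."""
    rows, cols = np.mgrid[0:height, 0:width]
    return np.exp(-((cols - u) ** 2 + (rows - v) ** 2) / (2 * sigma**2))

def extract_keypoints(heatmaps: np.ndarray, threshold: float = 0.5) -> list:
    """Decode a (K, H, W) stack of heatmaps, one channel per semantic keypoint type.

    Returns one (u, v, score) tuple per channel, or None when the peak score is
    below `threshold` (e.g. the keypoint is occluded or out of view).
    """
    keypoints = []
    for channel in heatmaps:
        row, col = np.unravel_index(int(np.argmax(channel)), channel.shape)
        score = float(channel[row, col])
        keypoints.append((int(col), int(row), score) if score >= threshold else None)
    return keypoints

# Two hypothetical keypoint types (e.g. two towel corners) on a 64x64 image.
heatmaps = np.stack([gaussian_heatmap(10, 20, 64, 64), gaussian_heatmap(40, 5, 64, 64)])
print(extract_keypoints(heatmaps))  # [(10, 20, 1.0), (40, 5, 1.0)]
```

Per-channel heatmaps also handle a missing keypoint naturally: a low peak simply means "not detected", which the threshold turns into `None`.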

Procedural Data Generation

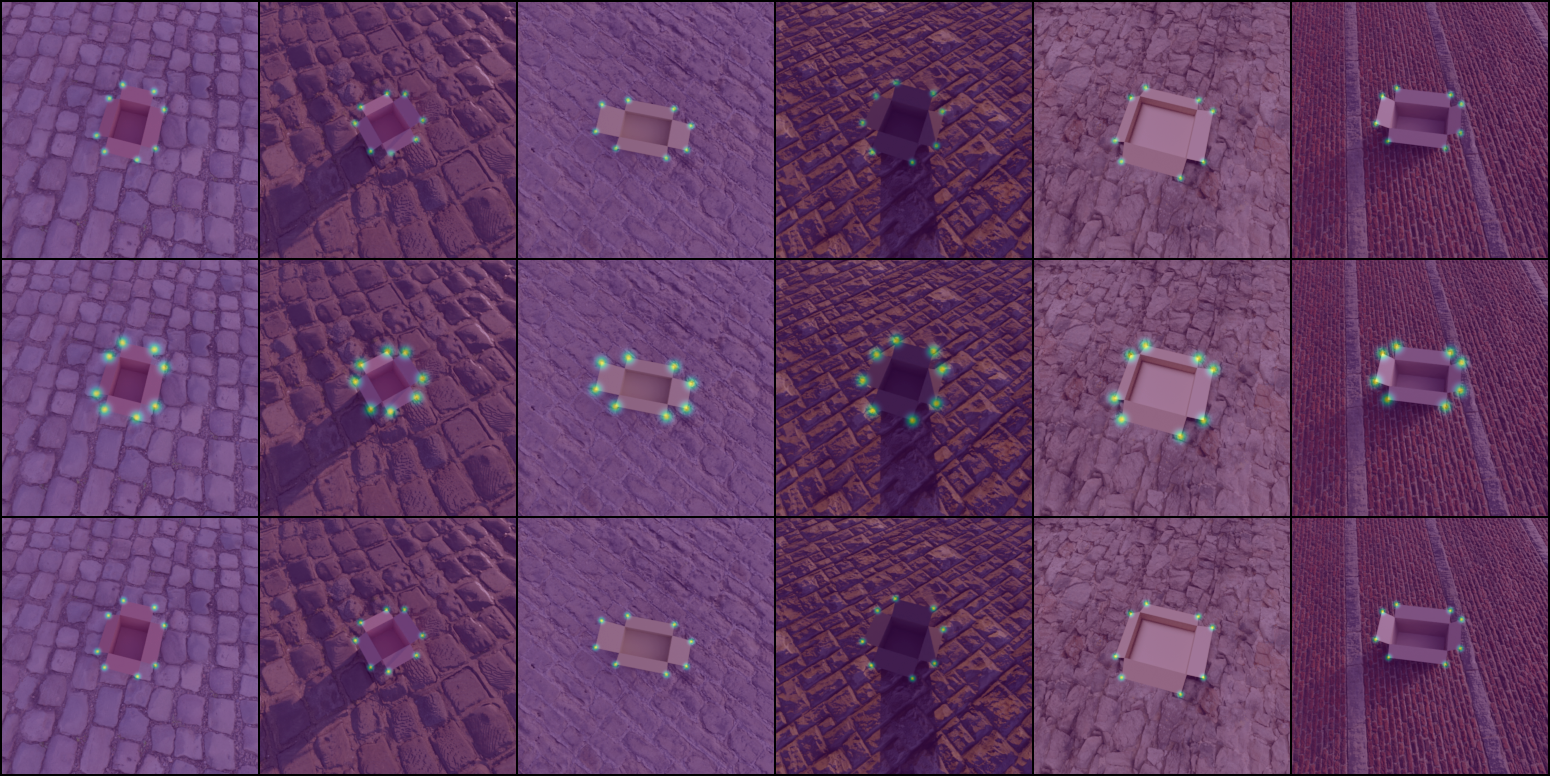

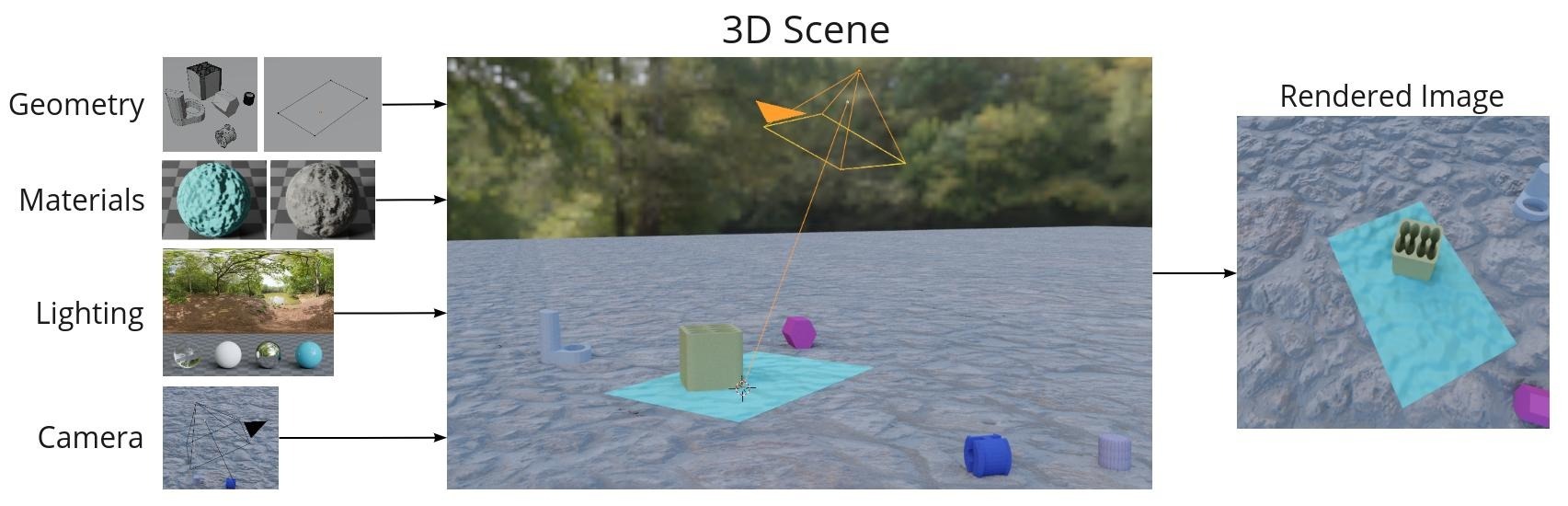

We use synthetic data and procedural data generation to reduce the need for manual data collection in the real world, which is expensive and sometimes not even possible. Procedural data generation lets us create data containing all desired variations in object configuration (especially important for deformable or articulated objects, which have many degrees of freedom), lighting conditions, backgrounds, distractor objects, etc. These datasets can then be used to learn representations, after which the trained networks are transferred to the real world.

A Blender pipeline for procedural generation of cloth scenes.
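At its core, procedural generation means sampling every scene parameter from a distribution and handing each sample to a renderer, which can then emit the image together with its exact annotations. The sketch below covers only the sampling side and is independent of Blender; the `ClothSceneParams` fields, their ranges, `sample_cloth_scene` and `generate_dataset_configs` are hypothetical illustrations, not the configuration of the actual pipeline.

```python
import random
from dataclasses import asdict, dataclass

@dataclass
class ClothSceneParams:
    # Hypothetical parameters; all fields and ranges are illustrative assumptions.
    cloth_width_m: float
    cloth_length_m: float
    fold_fraction: float  # how much of the cloth is folded over (0 = flat)
    light_intensity: float
    light_azimuth_deg: float
    background_id: int  # index into a pool of background textures
    num_distractors: int

def sample_cloth_scene(rng: random.Random, num_backgrounds: int = 100) -> ClothSceneParams:
    """Draw one random scene configuration."""
    width = rng.uniform(0.3, 0.8)
    return ClothSceneParams(
        cloth_width_m=width,
        cloth_length_m=width * rng.uniform(1.0, 2.0),  # never shorter than it is wide
        fold_fraction=rng.uniform(0.0, 0.5),
        light_intensity=rng.uniform(100.0, 1000.0),
        light_azimuth_deg=rng.uniform(0.0, 360.0),
        background_id=rng.randrange(num_backgrounds),
        num_distractors=rng.randint(0, 5),
    )

def generate_dataset_configs(n: int, seed: int = 0) -> list[dict]:
    """Seeded, so the same n and seed always reproduce the same dataset."""
    rng = random.Random(seed)
    return [asdict(sample_cloth_scene(rng)) for _ in range(n)]

configs = generate_dataset_configs(1000, seed=42)
# Each dict would then be passed to a renderer (e.g. a Blender script) that
# produces an image plus its automatically known keypoint annotations.
```

Using a dedicated `random.Random` instance instead of the module-level generator keeps dataset generation reproducible even if other code also draws random numbers.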

Efficient Inference

Finally, we work towards making deep learning inference more efficient, so that our neural networks can run on the embedded hardware available on robots.
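One common route to cheaper inference on embedded hardware is quantization: storing weights as 8-bit integers instead of 32-bit floats. The page does not say which efficiency techniques AIRO uses; the NumPy sketch below only illustrates symmetric per-tensor int8 quantization, and `quantize_int8`, `dequantize` and the random weight matrix are hypothetical.

```python
import numpy as np

# Illustration of one generic technique (int8 quantization), not AIRO's method.

def quantize_int8(w: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid division by zero
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map the integer codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.05, size=(256, 256)).astype(np.float32)  # hypothetical layer
q, scale = quantize_int8(weights)
error = float(np.max(np.abs(dequantize(q, scale) - weights)))  # at most about scale / 2
print(weights.nbytes, q.nbytes)  # 262144 65536
```

The 4x memory reduction comes directly from the 4-byte to 1-byte element size; the rounding error per weight is bounded by half a quantization step.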

Application Areas

The techniques we develop can be applied to several domains. We focus on challenging object categories such as deformable objects (cloth in particular), highly reflective industrial objects and articulated objects. Among other things, we have created several high-quality datasets, including one of humans folding cloth and one of both synthetic and real industrial metal objects. Another application area is learning the dynamics of robots or other mechanical systems.

Example application: learning a progress metric for cloth folding from human demonstrations.

Publications

Learning keypoints from synthetic data for robotic cloth folding

In ICRA 2022 Workshop on Representing and Manipulating Deformable Objects

2022

Robotic cloth manipulation is challenging due to the deformability of cloth, which makes determining its full state infeasible. However, for cloth folding, it suffices to know the positions of a few semantic keypoints. Convolutional neural networks (CNNs) can be used to detect these keypoints, but they require large amounts of annotated data, which is expensive to collect. To overcome this, we propose to learn these keypoint detectors purely from synthetic data, enabling low-cost data collection. In this paper, we procedurally generate images of towels and use them to train a CNN. We evaluate the performance of this detector for folding towels on a unimanual robot setup and find that the grasp and fold success rates are 77% and 53%, respectively. We conclude that learning keypoint detectors from synthetic data for cloth folding and related tasks is a promising research direction, discuss some failures and relate them to future work. A video of the system, the codebase, and more details on the CNN architecture and training setup can be found at

https://github.com/tlpss/workshop-icra-2022-cloth-keypoints.git.

Learning self-supervised task progression metrics: a case of cloth folding

In Applied Intelligence

2023

An important challenge for smart manufacturing systems is finding relevant metrics that capture task quality and progression for process monitoring to ensure process reliability and safety. Data-driven process metrics construct features and labels from abundant raw process data, which incurs costs and inaccuracies due to the labelling process. In this work, we circumvent expensive process data labelling by distilling the task intent from video demonstrations. We present a method to express the task intent in the form of a scalar value by aligning a self-supervised learned embedding to a small set of high-quality task demonstrations. We evaluate our method on the challenging case of monitoring the progress of people folding clothing. We demonstrate that our approach effectively learns to represent task progression without manually labelling sub-steps or progress in the videos. Using case-based experiments, we find that our method learns task-relevant features and useful invariances, making it robust to noise, distractors and variations in the task and shirts. The experimental results show that the proposed method can monitor processes in domains where state representation is inherently challenging.
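To make "expressing task intent as a scalar by aligning an embedding to demonstrations" more concrete, here is a deliberately simple stand-in, not the method from the paper: given per-frame embeddings of a few reference demonstrations, score a new frame by the normalized time index of its nearest neighbour in each demonstration, averaged over demonstrations. The embedding model is assumed to exist already; `progress_score`, `straight_line_demo` and the toy 2-D embeddings are hypothetical.

```python
import numpy as np

# Hypothetical nearest-neighbour progress score; NOT the paper's method.

def progress_score(frame_emb: np.ndarray, demos: list[np.ndarray]) -> float:
    """Estimate task progress in [0, 1] for a single frame embedding.

    demos: per-frame embeddings of reference demonstrations, each of shape (T_i, D)
    with T_i >= 2, ordered from task start (progress 0) to task end (progress 1).
    """
    scores = []
    for demo in demos:
        dists = np.linalg.norm(demo - frame_emb, axis=1)  # distance to every demo frame
        nearest = int(np.argmin(dists))
        scores.append(nearest / (len(demo) - 1))  # normalized time index of the match
    return float(np.mean(scores))

def straight_line_demo(num_frames: int) -> np.ndarray:
    """Toy 2-D 'embedding' trajectory moving steadily from (0, 0) to (1, 0)."""
    return np.stack([np.linspace(0.0, 1.0, num_frames), np.zeros(num_frames)], axis=1)

# Two demonstrations of different length still agree on what "halfway" looks like.
demos = [straight_line_demo(11), straight_line_demo(21)]
print(progress_score(np.array([0.5, 0.0]), demos))  # 0.5
```

Normalizing by demonstration length is what makes demonstrations of different durations comparable. The quality of such a score depends entirely on the embedding: nearest-neighbour matching is only as invariant to distractors and clothing variations as the embedding itself.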