open-source

At AIRO we value open-source hardware, software and datasets. This page presents a selection of the tools and datasets that we have released.

Datasets

Folding dataset

In our unique cloth folding dataset, we provide 8.5 hours of demonstrations from multiple perspectives leading to 1,000 folding samples of different types of textiles. The demonstrations are recorded in multiple public places, in different conditions with a diverse set of people. Our dataset consists of anonymized RGB images, depth frames, skeleton keypoint trajectories, and object labels. The dataset is available on this GitHub repository.

Hyperspectral camera dataset

This is a close-range hyperspectral camera dataset of strawberry with high temporal resolution, accompanied by eco-physiological data of one leaf. It is fully available on Zenodo.

DIMO: Dataset of Industrial Metal Objects

The DIMO dataset is a diverse set of industrial metal objects. These objects are symmetric, textureless and highly reflective, leading to challenging conditions not captured in existing datasets. Our 6D object pose estimation dataset contains both real-world and synthetic images. Real-world data is obtained by recording multi-view images of scenes with varying object shapes, materials, carriers, compositions and lighting conditions. Our dataset contains 31,200 images of 600 real-world scenes and 553,800 images of 42,600 synthetic scenes, stored in a unified format. The close correspondence between synthetic and real-world data, and controlled variations, will facilitate sim-to-real research. Our dataset's size and challenging nature will facilitate research on various computer vision tasks involving reflective materials. The full dataset and labeling tool are available on this GitHub repository.
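As a quick sanity check on the figures above, the short sketch below derives the average number of images per scene, which works out to 52 for the real-world scenes and 13 for the synthetic ones. It only uses the counts quoted in the description; the function and variable names are illustrative and are not part of the DIMO tooling.

```python
# Image and scene counts quoted in the DIMO description above.
REAL_IMAGES, REAL_SCENES = 31_200, 600
SYNTHETIC_IMAGES, SYNTHETIC_SCENES = 553_800, 42_600


def images_per_scene(images: int, scenes: int) -> float:
    """Return the average number of images per scene."""
    return images / scenes


real_avg = images_per_scene(REAL_IMAGES, REAL_SCENES)
synthetic_avg = images_per_scene(SYNTHETIC_IMAGES, SYNTHETIC_SCENES)

# Fraction of all images in the dataset that are synthetic.
synthetic_share = SYNTHETIC_IMAGES / (REAL_IMAGES + SYNTHETIC_IMAGES)

print(f"real: {real_avg:.0f} images per scene")
print(f"synthetic: {synthetic_avg:.0f} images per scene")
print(f"synthetic share of all images: {synthetic_share:.1%}")
```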

Almost-Ready-To-Fold (aRTF) Clothes Dataset

We have created a dataset of almost-flattened clothes in which we mimic the output of existing robotic unfolding systems by applying small deformations to the clothes. The dataset contains a total of 1,896 images of 100 different garments in 14 different real-world scenes. We refer to this dataset as the aRTF Clothes dataset, for almost-ready-to-fold clothes. There have already been some efforts to collect datasets for robotic cloth manipulation, but to the best of our knowledge, this dataset is the first to provide annotated real-world images of almost-flattened cloth items in household settings. The dataset is available on this GitHub repository.

Cloth Manipulation Competition Dataset and Code

Regrasping garments in the air is a popular cloth unfolding strategy. To get started, it’s common to grasp the lowest point. However, better grasp points are needed to unfold the garment completely, such as the shoulders of a shirt or the waist of shorts. The objective of the ICRA 2024 cloth manipulation competition was to localise good grasp poses in colour and depth images of garments held in the air by one gripper. We evaluated the predicted grasps by executing them on a dual UR5e setup live at ICRA. The cloth items to be grasped were towels, shirts and shorts. The dataset, code and details are available on this page.

Glasses-in-the-Wild Dataset

The Glasses-in-the-Wild dataset is a collection of 1,000 RGB images of transparent and partially filled glasses, captured in diverse real-world environments. It was crowdsourced from 11 participants and includes 93 unique glass types across 60 different scenes with varying backgrounds, lighting conditions, reflections, occlusions, and distractors. Each image is annotated with bounding boxes and semantically meaningful keypoints, including the rim, base, and liquid level, to facilitate the training of models for transparent object detection and liquid level estimation. The dataset contains a broad distribution of liquid levels: 24.3% of glasses are empty, while 75.7% contain liquid, with an average fill of 48%. This dataset complements existing transparent object datasets by providing a wider variety of glass shapes, sizes, colors, and real-world conditions, supporting robust training for robotic perception systems and other computer vision applications involving transparent containers. The dataset is available on Zenodo.

Software

AIRO-MONO Repo

The AIRO-MONO Repo provides ready-to-use Python packages to accelerate the development of robotic manipulation systems. The goals of this repo are to:

- facilitate our research and development in robotic perception and control

- facilitate the creation of demos/applications such as the folding competitions by providing either wrappers to existing libraries or implementations for common functionalities and operations.

Pytorch-Hebbian

A lightweight framework for Hebbian learning based on PyTorch Ignite. It can be found on GitHub and was presented at the Beyond Backpropagation workshop at NeurIPS 2020.

- PyTorch-Hebbian: facilitating local learning in a deep learning framework. In NeurIPS 2020 Beyond Backpropagation Workshop, 2020.

Hardware

Gloxinia

Gloxinia is a new modular data logging platform, optimised for real-time physiological measurements. The system is composed of modular sensor boards that are interconnected depending upon the needs, with the potential to scale to hundreds of sensors in a distributed sensor system. Two different types of sensor boards were designed: one for single-ended measurements and one for lock-in amplifier based measurements, named Sylvatica and Planalta respectively. Both hardware and software have been open-sourced on GitHub.
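For readers unfamiliar with lock-in amplifier based measurements, the sketch below illustrates the general principle in plain Python: the measured signal is multiplied by in-phase and quadrature copies of a known reference frequency and averaged, which recovers the amplitude at that frequency while rejecting offsets and other frequencies. This is a generic textbook illustration and not the Planalta firmware; the function name, sample rate and test signal are all assumptions made for the example.

```python
import math


def lock_in_amplitude(samples, sample_rate_hz, reference_hz):
    """Estimate the amplitude of the component at reference_hz.

    Assumes the samples span a whole number of reference periods.
    """
    n = len(samples)
    in_phase = 0.0
    quadrature = 0.0
    for k, value in enumerate(samples):
        phase = 2.0 * math.pi * reference_hz * k / sample_rate_hz
        in_phase += value * math.cos(phase)
        quadrature += value * math.sin(phase)
    # Averaging acts as the low-pass filter; the factor 2 restores the amplitude.
    return math.hypot(2.0 * in_phase / n, 2.0 * quadrature / n)


# Hypothetical test signal: a 50 Hz component of amplitude 0.5 with an unknown
# phase, buried under a DC offset and a 120 Hz interferer.
SAMPLE_RATE_HZ = 1000
signal = [
    0.5 * math.sin(2.0 * math.pi * 50 * k / SAMPLE_RATE_HZ + 0.3)
    + 2.0
    + 0.2 * math.sin(2.0 * math.pi * 120 * k / SAMPLE_RATE_HZ)
    for k in range(1000)
]

amplitude = lock_in_amplitude(signal, SAMPLE_RATE_HZ, reference_hz=50)
print(f"recovered amplitude: {amplitude:.3f}")
```

Because both quadrature components are combined, the estimate does not depend on the phase offset between the signal and the reference.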

MIRRA

MIRRA is a modular and cost-effective microclimate monitoring system for real-time remote applications that require low data rates. Its modularity enables the use of different sensors (e.g., air and soil temperature, soil moisture and radiation) depending on the application, and its innovative node system is highly suitable for remote locations. The entire system is open source and can be constructed and programmed from the design and code files, hosted on GitHub.

Smart Textile

The Smart Textile is a wireless, integrated system for instrumentation of cloths. Its sensor matrix detects local pressure variations, mapping them to 8-bit values that can be read using Bluetooth Low Energy. This data can be used to distinguish different states, e.g. folds, of the Smart Textile and thus of any larger cloth it is sewn onto. As such, a new modality for state observation in textile manipulation tasks is obtained. With an output rate of 50+ Hz and a battery life of 15+ hours, the Smart Textile system is aptly suited for in-vivo cobot learning. Both hardware and software are freely available on GitHub.
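To illustrate how such 8-bit pressure values might be handled once received, the sketch below reshapes a flat byte payload into a grid and lists the cells above a threshold. The payload layout (one byte per cell, row-major order), the grid size and the function names are assumptions made for this example; the actual Bluetooth Low Energy characteristics and data format are defined in the GitHub repository.

```python
from typing import List, Tuple


def decode_frame(payload: bytes, rows: int, cols: int) -> List[List[int]]:
    """Reshape a flat payload of 8-bit values into a rows x cols grid.

    Assumes one byte per sensor cell in row-major order (hypothetical layout).
    """
    if len(payload) != rows * cols:
        raise ValueError(f"expected {rows * cols} bytes, got {len(payload)}")
    return [list(payload[r * cols:(r + 1) * cols]) for r in range(rows)]


def pressed_cells(frame: List[List[int]], threshold: int) -> List[Tuple[int, int]]:
    """Return (row, col) indices of cells whose value is at least threshold."""
    return [
        (r, c)
        for r, row in enumerate(frame)
        for c, value in enumerate(row)
        if value >= threshold
    ]


# Hypothetical 2 x 3 frame; real frames would arrive over Bluetooth Low Energy.
example_payload = bytes([0, 10, 200, 0, 255, 30])
frame = decode_frame(example_payload, rows=2, cols=3)
print(frame)                      # [[0, 10, 200], [0, 255, 30]]
print(pressed_cells(frame, 128))  # [(0, 2), (1, 1)]
```

A sequence of such frames could then be compared over time to distinguish states such as folds.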

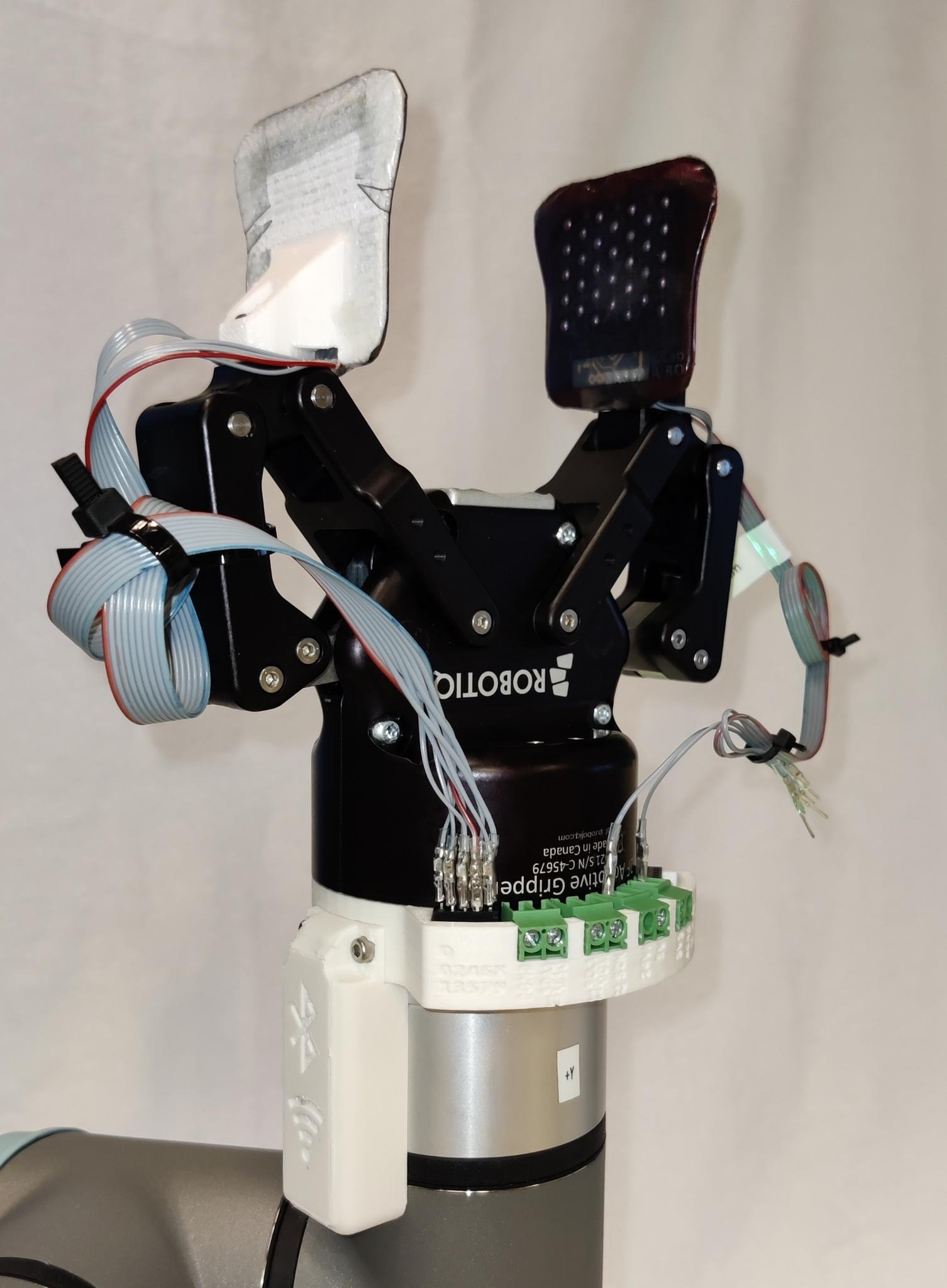

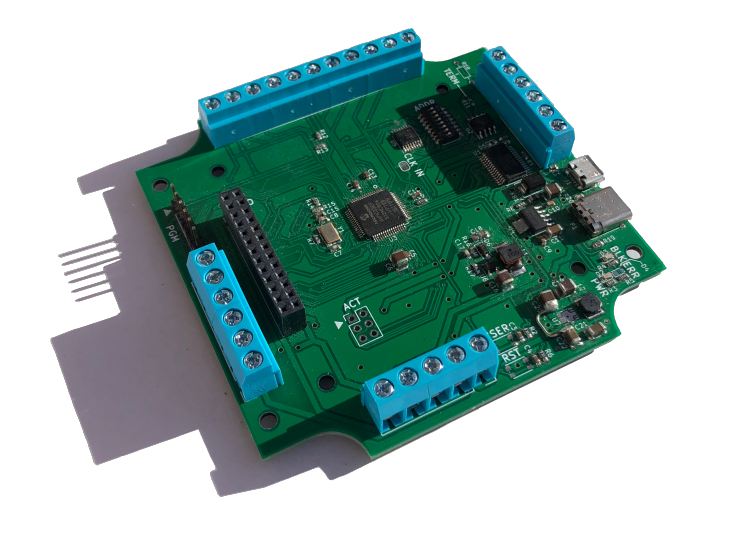

Halberd

The Halberd coupling is a replacement for the Robotiq I/O Coupling, enhanced with a Nina B301 microcontroller. It was created for seamless integration of tactile sensors on Robotiq grippers to enhance the manipulation capabilities of our cobots: no more external power or data cables! Halberd features extensive GPIO, several communication interfaces, and safety circuitry similar to that of the Robotiq I/O Coupling itself. It is Arduino compatible and programmable over a micro USB interface. Both hardware and software are freely available on GitHub.